The Stock That Soared 2,500%: How a Canadian Manufacturer Became AI’s Secret Ingredient

How a former IBM manufacturing arm with century-old roots became the shovel-seller extraordinaire of artificial intelligence

When you ask ChatGPT to teach you how to make beef bourguignon, that French stew you have been dying to master, it immediately spits out a step-by-step process. Sear the beef. Add the bacon, mushrooms, and carrots. Do not forget the tomato paste. Slowly braise it all with red wine. Simple enough. But here is a question few people stop to ask: What is actually powering ChatGPT while you are frantically chopping vegetables at 7 PM on a Tuesday?

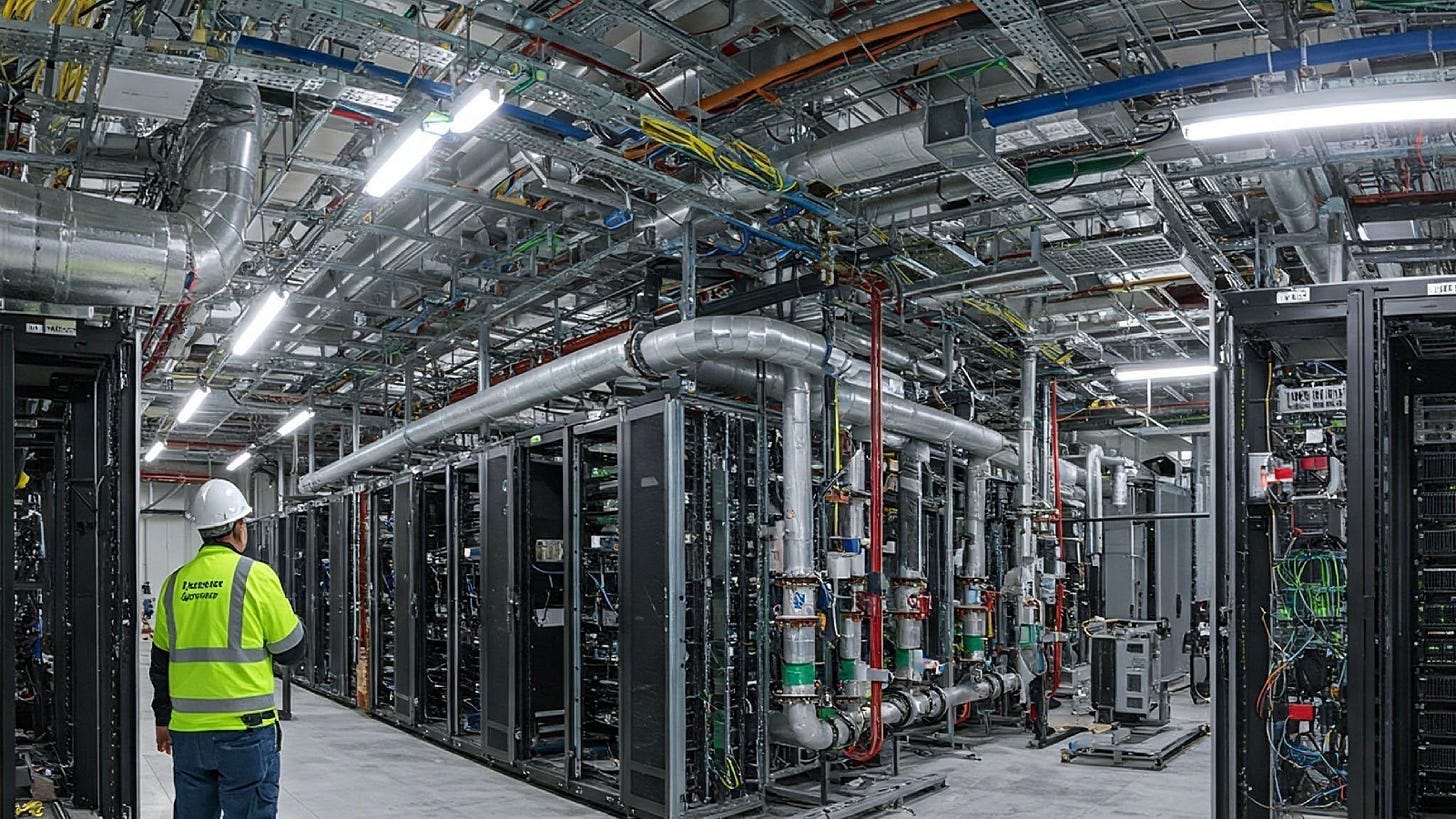

The answer involves clusters of thousands of graphics processing units (GPUs), the specialized computer chips made famous by Nvidia. They hum away in vast data centers, collectively performing quadrillions of calculations per second. All that computing generates so much heat that these facilities need industrial-grade cooling systems just to keep from melting down.

Most people know about Nvidia by now. The company has become shorthand for the AI boom, reaching a market capitalization that made it the world’s most valuable company. Nvidia’s story, selling the picks and shovels to the AI gold rush, has been told and retold. But there is a Canadian company in this ecosystem that is far less famous: Celestica.

If Nvidia sells the shovels to AI miners, Celestica sells them the entire prospecting kit: the tent, the pickaxe, the water canteen, the map, and instructions on how to use it all. The company does not just manufacture servers that house Nvidia’s GPUs. It designs and builds complete “rack-scale solutions,” entire floor-to-ceiling cabinets that integrate networking cables, computing power, storage systems, and advanced liquid cooling into single, deployable units. These are not off-the-shelf products. They are bespoke systems engineered to wring as much performance as possible out of every watt while keeping the whole thing from overheating.

The numbers tell a remarkable story. Celestica’s full-year 2025 revenue outlook reached $12.2 billion, up from the $10.7 billion it initially projected, and its earnings per share grew 58% year-over-year in 2024. The company’s stock price has climbed more than 2,500% from its 2023 lows, giving it a market capitalization hovering around $38 billion as of early 2026. What makes this ascent particularly striking is that Celestica spent years as a commodity manufacturer that industry observers once dismissed as a business that “sucks,” plagued by single-digit margins and no apparent competitive edge.

Celestica’s origin story reads like a series of fortunate accidents. The company traces its lineage back to 1917 as the manufacturing division of IBM Canada. For decades, it quietly produced mainframes and enterprise hardware, the kind of unglamorous work that keeps corporate IT departments running. The turning point came in 1994, when IBM, like many tech companies of that era, decided to divest its internal manufacturing operations to focus on software and services. Celestica became a separate company and went public in 1998, about two years before the dot-com bubble burst.

What followed was a brutal decade. The company found itself stuck in what business strategists call a “commoditized” market, providing low-value-added services with razor-thin profit margins and little pricing power. It was a contract manufacturer that built whatever clients specified and hoped to eke out a few percentage points of profit.

In 2015, Rob Mionis became CEO of Celestica and initiated what turned out to be a decade-long metamorphosis. His strategy was straightforward but difficult to execute: shift away from low-margin consumer electronics and toward high-complexity technology solutions.

The company reorganized into two main divisions. Advanced Technology Solutions (ATS) serves aerospace, defense, healthcare, and industrial markets, sectors that value reliability over rock-bottom prices. But the crown jewel is Connectivity and Cloud Solutions (CCS), the division riding the AI capital expenditure wave. It serves the communications and enterprise markets, providing the complex networking and compute hardware that modern data centers require.

The real magic happens within CCS through something Celestica calls Hardware Platform Solutions (HPS). Here is where the company departs from typical contract manufacturing by owning the product designs. In Q3 2025 alone, HPS revenue reached approximately $1.4 billion, representing a 79% increase from the previous year. This includes high-performance Ethernet switches, storage arrays, and server platforms that Celestica designs, engineers, and controls from conception to deployment.

This ownership model creates what business professors call “switching costs.” Once a hyperscaler, industry jargon for mega-cloud providers like Amazon Web Services (AWS) and Google Cloud, designs its infrastructure around Celestica’s custom platforms, ripping everything out and starting over becomes prohibitively expensive. It is like building a house with custom-sized bricks; switching to a different brick manufacturer means rebuilding the entire structure.

In 2024, Celestica earned Dell’Oro Market Share Leader Badge Awards in two categories, “Ethernet Switch, AI Networks” and “High-Speed Networks (≥ 800G).” The achievement essentially crowns it king of the very fast plumbing that connects AI computers together.

Let’s demystify what this means. Modern AI systems do not train on single computers; they use thousands of GPUs working in parallel, constantly sharing information. The speed at which those GPUs can communicate largely determines how quickly a model can be trained. Ethernet switches are the traffic controllers in this process, directing data packets between computing nodes at unfathomable speeds.

The flagship of Celestica’s switch portfolio is the DS5000, a beast of a machine delivering 51.2 terabits per second of switching capacity. To put that in perspective, at the 15 to 25 megabits per second typically recommended for a single 4K stream, one DS5000 could in principle carry roughly two to three million 4K movie streams at once. But Celestica did not stop at 800-gigabit switches. The company recently introduced the DS6000 and DS6001, new switches with 1.6-terabit ports that provide 102.4 terabits per second of switching capacity, double that of the previous generation. These switches are not just faster. They use Broadcom Tomahawk 6 chips built on a 3-nanometer process, among the most advanced chipmaking technologies in volume production today.
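The capacity figures above come down to simple arithmetic, and so does any comparison with video streams. The Python sketch below works through both under two assumptions that do not come from Celestica. First, each switch has 64 front-panel ports, a common configuration for Tomahawk 5 and Tomahawk 6 designs. Second, a 4K stream needs roughly 15 to 25 megabits per second. The helper names are hypothetical. Protocol overhead is ignored, so treat the results as rough ceilings rather than real-world throughput.

```python
# Back-of-envelope switch arithmetic. Port counts and stream bitrates are
# illustrative assumptions, not Celestica specifications.

GBPS = 10**9   # bits per second in one gigabit per second
MBPS = 10**6   # bits per second in one megabit per second
TBPS = 10**12  # bits per second in one terabit per second


def aggregate_capacity_bps(ports: int, port_speed_gbps: int) -> int:
    """Total switching capacity for `ports` ports running at `port_speed_gbps` each."""
    return ports * port_speed_gbps * GBPS


def concurrent_streams(capacity_bps: int, stream_mbps: int) -> int:
    """Whole number of `stream_mbps` streams that fit in `capacity_bps`, ignoring overhead."""
    return capacity_bps // (stream_mbps * MBPS)


# Assumption: 64 ports per switch (800G ports on the DS5000, 1.6T ports on the DS6000).
switches = {
    "DS5000": aggregate_capacity_bps(64, 800),
    "DS6000": aggregate_capacity_bps(64, 1600),
}

for name, capacity in switches.items():
    # Assumption: a 4K stream needs roughly 15 (lean) to 25 (generous) Mb/s.
    fewest = concurrent_streams(capacity, 25)
    most = concurrent_streams(capacity, 15)
    print(f"{name}: {capacity / TBPS:.1f} Tb/s, roughly {fewest:,} to {most:,} 4K streams")
```

Integer arithmetic keeps the stream counts exact. Halving the assumed bitrate doubles the stream count, so any comparison of this kind should state the bitrate it assumes.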

Wall Street has a term for companies that consistently exceed expectations and then raise their forecasts: “beat and raise.” Celestica has mastered this playbook. In Q3 2025, the company achieved revenue of $3.19 billion and adjusted earnings per share of $1.58, both exceeding guidance ranges, prompting management to increase full-year revenue expectations to $12.2 billion from $11.55 billion.
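The easiest way to size a “raise” is as a percentage change in guidance. The short Python sketch below computes that change from the full-year 2025 figures quoted in this article: $10.7 billion initially, then $11.55 billion, then $12.2 billion. The helper `pct_change` is a hypothetical name used only for illustration. Any intermediate revisions between those points are not shown.

```python
def pct_change(old: float, new: float) -> float:
    """Percentage change from `old` to `new`."""
    if old == 0:
        raise ValueError("old value must be non-zero")
    return (new - old) / old * 100


# Full-year 2025 revenue guidance in billions of US dollars, as quoted in the article.
# Intermediate revisions between these points, if any, are not shown.
guidance_path = [
    ("initial outlook", 10.7),
    ("before Q3 results", 11.55),
    ("after Q3 results", 12.2),
]

# Change between consecutive guidance figures.
for (prev_label, prev), (label, current) in zip(guidance_path, guidance_path[1:]):
    print(f"{prev_label} -> {label}: {pct_change(prev, current):+.1f}%")

# Cumulative change from the first figure to the last.
total = pct_change(guidance_path[0][1], guidance_path[-1][1])
print(f"cumulative: {total:+.1f}%")
```

On these numbers, the post-Q3 raise works out to roughly 5.6%. The full-year outlook ended about 14% above where it started.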

The company’s balance sheet looks remarkably healthy, especially compared to the debt-fueled expansion of some AI infrastructure companies. Celestica holds over $300 million in cash. Its current ratio is 1.47, well above the industry average of 1.17; this ratio, current assets divided by current liabilities, is a measure of short-term financial health. For comparison, some specialized AI data center operators like CoreWeave carry current ratios below 0.50. That means their short-term obligations are more than double the current assets available to pay them, a precarious position.
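The current ratio is simply current assets divided by current liabilities, so the thresholds in the paragraph above are easy to reproduce. The Python sketch below uses hypothetical balance-sheet figures, in millions of dollars, chosen only to hit the ratios cited. They are not Celestica’s or CoreWeave’s actual numbers.

```python
def current_ratio(current_assets: float, current_liabilities: float) -> float:
    """Current assets divided by current liabilities."""
    if current_liabilities <= 0:
        raise ValueError("current liabilities must be positive")
    return current_assets / current_liabilities


# HYPOTHETICAL balance sheets (millions of USD), not reported figures.
# Each pair is (current assets, current liabilities).
examples = {
    "Celestica-like (1.47)": (1_470, 1_000),
    "industry average (1.17)": (1_170, 1_000),
    "below 0.50": (450, 1_000),
}

for label, (assets, liabilities) in examples.items():
    ratio = current_ratio(assets, liabilities)
    # Below 1.0, short-term obligations exceed current assets; the gap is the shortfall.
    shortfall = max(liabilities - assets, 0)
    print(f"{label}: ratio {ratio:.2f}, shortfall ${shortfall:,} million")
```

The ratio is a snapshot of liquidity, not a verdict on solvency. A company below 1.0 may still refinance comfortably, but it depends more heavily on doing so.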

Hyperscalers represented 77% of Celestica’s CCS revenue as of 2025, up from 51% in 2022, and two CCS customers each account for more than 10% of total revenue. This concentration makes Celestica extraordinarily sensitive to the spending patterns of a handful of tech giants. The risk is real and quantifiable: if Amazon, Microsoft, Google, or Meta suddenly slashed infrastructure spending, Celestica would feel it immediately. The entire AI infrastructure sector lives or dies by quarterly guidance from these behemoths, and any hint of a pullback in capital expenditure would send shockwaves through equipment suppliers.
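One crude way to put a number on that concentration risk is to assume revenue falls in proportion to any cut in hyperscaler purchases. The Python sketch below does exactly that, using the article’s $12.2 billion full-year revenue outlook and the 77% hyperscaler share of CCS revenue. The CCS share of total revenue (70% here) is a hypothetical placeholder, not a reported figure, and `revenue_at_risk` is an illustrative name. Real contracts, pricing, and timing would not respond this linearly.

```python
def revenue_at_risk(total_revenue: float, ccs_share: float,
                    hyperscaler_share_of_ccs: float, spending_cut: float) -> float:
    """Revenue lost if hyperscaler purchases fall by `spending_cut` (a 0-1 fraction).

    Assumes linear pass-through: a cut of X% in hyperscaler purchases removes
    X% of hyperscaler-linked CCS revenue, and nothing else changes.
    """
    for share in (ccs_share, hyperscaler_share_of_ccs, spending_cut):
        if not 0 <= share <= 1:
            raise ValueError("shares and cuts must be fractions between 0 and 1")
    return total_revenue * ccs_share * hyperscaler_share_of_ccs * spending_cut


TOTAL_REVENUE = 12.2      # billions USD, full-year 2025 outlook quoted in the article
HYPERSCALER_SHARE = 0.77  # hyperscalers' share of CCS revenue, quoted in the article
CCS_SHARE = 0.70          # HYPOTHETICAL placeholder for CCS's share of total revenue

for cut in (0.10, 0.20, 0.30):
    lost = revenue_at_risk(TOTAL_REVENUE, CCS_SHARE, HYPERSCALER_SHARE, cut)
    print(f"{cut:.0%} hyperscaler cut -> about ${lost:.2f}B ({lost / TOTAL_REVENUE:.1%} of revenue)")
```

Because the model is linear, doubling the cut doubles the loss. Its main value is showing how quickly a modest pullback by a few customers becomes a meaningful share of total revenue.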

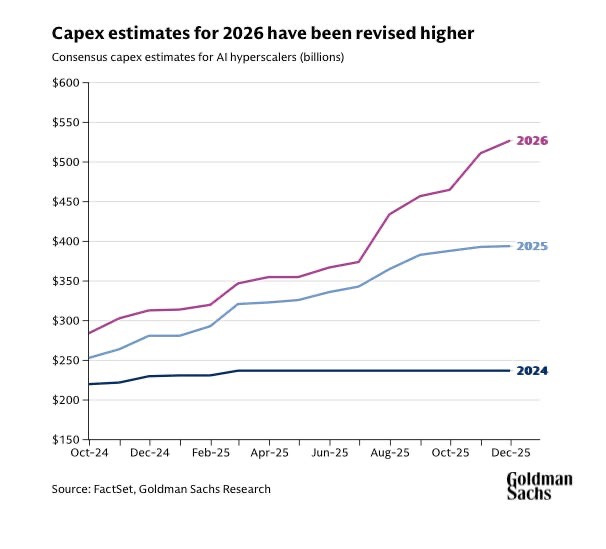

Yet the counterargument is equally compelling. According to the investment bank Goldman Sachs, combined hyperscaler capital expenditure is expected to approach $600 billion in 2026, a roughly 36% year-over-year increase. Some companies are dedicating 45% to 57% of their revenue to infrastructure spending. These companies face a prisoner’s dilemma: whoever blinks first and cuts spending risks falling hopelessly behind in the AI race.

The competitive dynamics have become almost Darwinian. Goldman Sachs Research notes that consensus capital expenditure estimates have proven too low for two consecutive years. At the start of both 2024 and 2025, estimates implied roughly 20% growth, but actual spending growth exceeded 50% in both years. This persistent underestimation suggests analysts have been systematically too skeptical, and that spending may continue to surprise to the upside.
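The growth figures in the last two paragraphs can be sanity-checked with two quick calculations. Dividing the 2026 forecast by 1.36 gives the implied 2025 base. Comparing 1.5 with 1.2 shows how far spending landed above a consensus that assumed 20% growth. The Python sketch below is illustrative and uses only the rounded figures quoted here. It treats “exceeded 50%” as exactly 50%, so the overshoot it reports is a lower bound. The function names are hypothetical.

```python
def implied_prior_year(current: float, growth: float) -> float:
    """Back out last year's value from this year's value and its growth rate."""
    return current / (1 + growth)


def overshoot(expected_growth: float, actual_growth: float) -> float:
    """How far actual spending landed above the consensus estimate, as a fraction."""
    return (1 + actual_growth) / (1 + expected_growth) - 1


# Goldman Sachs figures as quoted in the article (rounded).
capex_2026 = 600.0  # billions USD, forecast
growth_2026 = 0.36  # year-over-year growth implied by the forecast
print(f"Implied 2025 hyperscaler capex: ~${implied_prior_year(capex_2026, growth_2026):.0f}B")

# Consensus implied ~20% growth; actual growth exceeded 50% (50% used as a floor).
miss = overshoot(0.20, 0.50)
print(f"Spending landed at least {miss:.0%} above the consensus estimate")
```

Each year’s miss is measured against that year’s own starting consensus. That is why the sketch compares single-year growth factors rather than compounding them across both years.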

Celestica does not operate in a vacuum, of course. Arista Networks, a pure-play networking vendor, dominates the market for standardized switches with gross margins above 40%, more than four times Celestica’s roughly 10% margins. Other contract manufacturers like Jabil and Sanmina compete for similar business, though they spread their efforts across more diversified markets.

To differentiate itself, Celestica has made a calculated bet: go all-in on AI infrastructure at the expense of other opportunities. It is a high-risk, high-reward strategy that is paying off spectacularly, for now. Recent market research shows that vendors with higher exposure to AI back-end networks significantly outperformed the overall market, with companies like Accton and Celestica capturing the highest market share growth.

For all its recent success, Celestica faces existential questions that extend beyond quarterly earnings. The AI monetization problem looms large. Tech giants are spending like drunken sailors on AI infrastructure, but tangible revenue from AI products remains elusive for many of them. If 2026 passes without clear evidence that these investments generate returns, market sentiment could reverse violently, and equipment suppliers would likely be among the first casualties.

Then there is technological disruption. Networking standards evolve, and today’s 800-gigabit switches could become obsolete faster than expected if new architectures emerge. Celestica must continue investing heavily in R&D to stay ahead; there is no resting on laurels in semiconductor-adjacent businesses.

Margin compression presents another challenge. As competitors scale up, pricing pressure intensifies. Celestica’s margins, while improving, remain far below pure-play networking vendors. The company must continually prove its custom solutions justify premium pricing.

Celestica’s CEO Rob Mionis recently told CNBC: “If AI is a speeding freight train, we are laying the tracks ahead of it.” It is an apt metaphor. The company is not building the AI itself or even the chips that power it. Instead, it is constructing the essential infrastructure that makes large-scale AI deployment physically possible. Data from Dell’Oro Group shows that Ethernet data center switch sales surged more than 40% in Q1 2025, the strongest growth since the segment was first tracked separately in 2013.

This is not a cyclical uptick but a structural shift in how computing infrastructure gets built. Whether AI lives up to its transformative promise or takes longer to deliver returns, the infrastructure being laid today will define the next era of computing. For now, the tracks are being built faster than ever before.